Jialu Zheng

A passionate UX designer with a keen eye for detail, dedicated to creating intuitive, user-centric digital experiences through empathetic design, innovative problem-solving, and a strong understanding of user behavior.

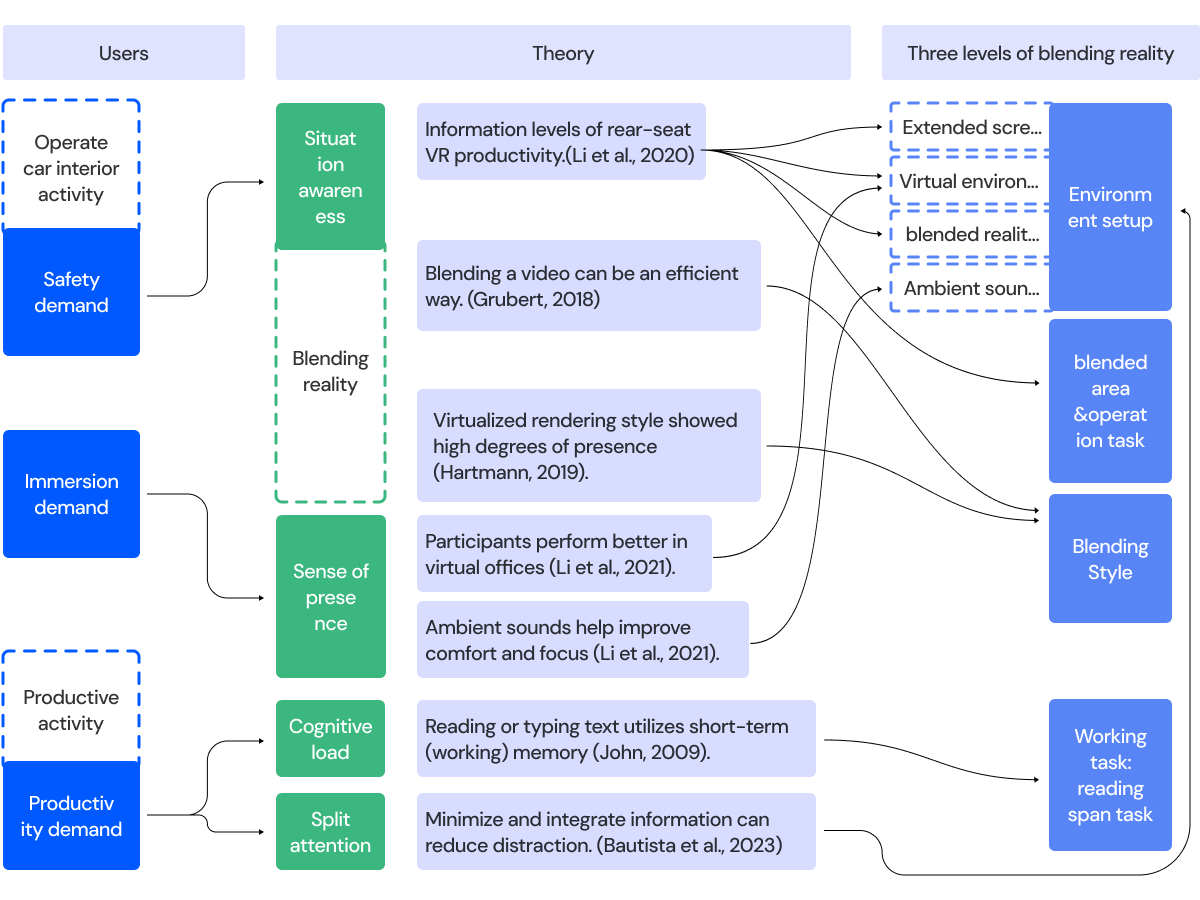

Aim: The project assesses how effectively varying levels of blending reality in in-car productivity VR improve productivity, immersion, and situational awareness for rear-seat tasks.

Context: In autonomous vehicles, passengers are likely to choose to work in the back seat, so mobile VR for productivity has clear potential.

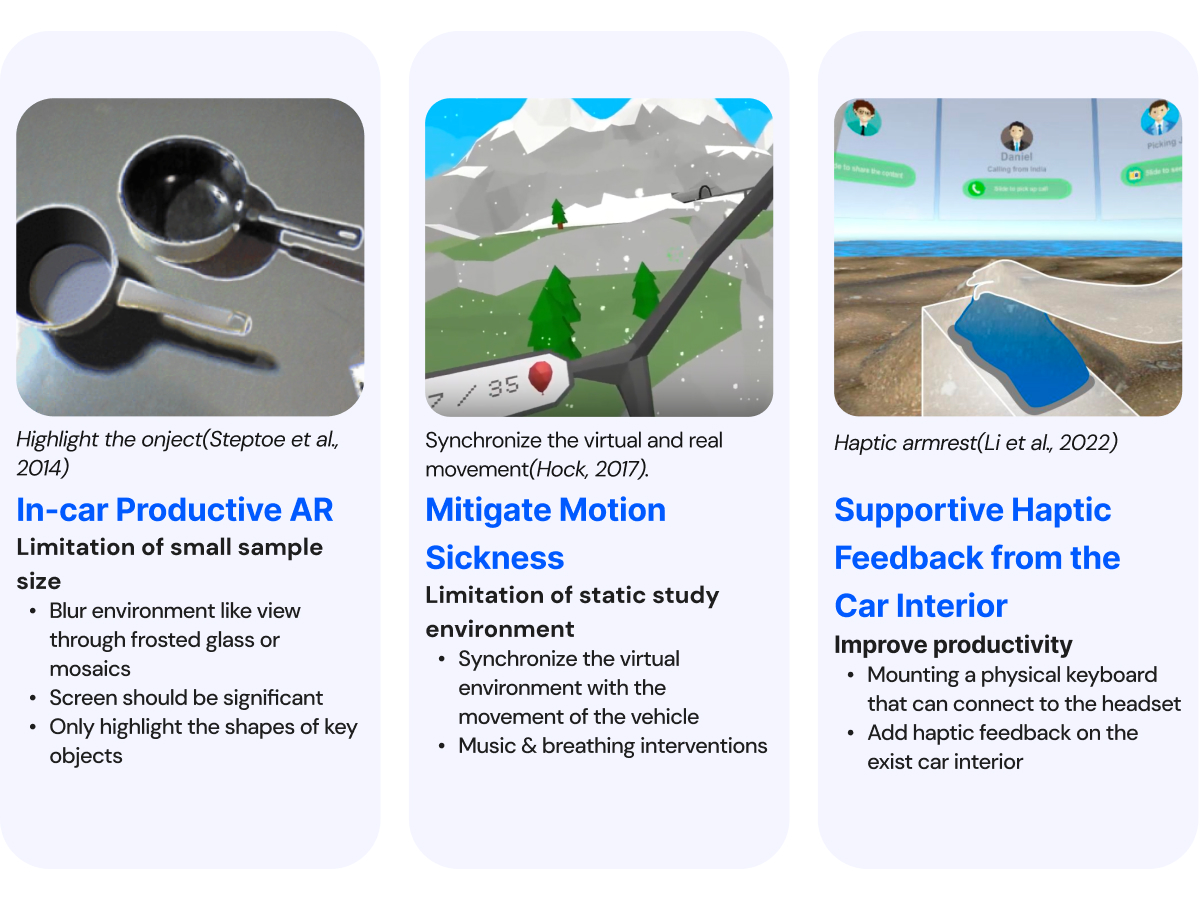

Challenges: The confined space limits interactivity (Brewster, 2022). Passengers are concerned about safety and want to keep situational awareness while wearing head-mounted displays (HMDs) (Li et al., 2021). Most prior work fully immerses commuters in a virtual environment. This reduces situational awareness, so passengers feel unsafe and face usability challenges when working in VR.

Blending reality (BR) refers to the seamless integration of real-world and virtual elements to create a mixed environment where users can interact with both simultaneously. Prior studies have explored how BR can balance immersion and awareness in mobile VR games and indoor VR work.

There are gaps here:

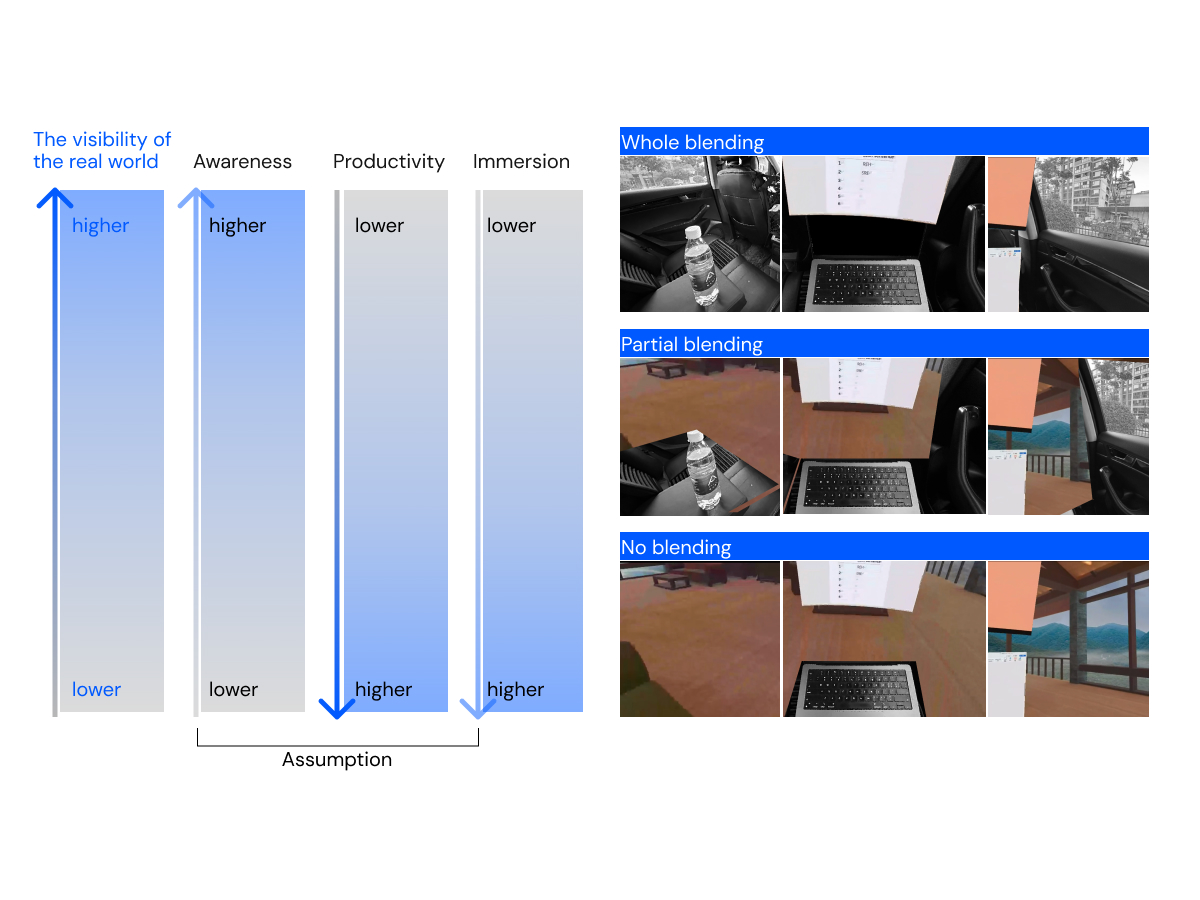

Hypothesis: The higher the blending level, the higher the passengers’ awareness, the lower their productivity, and the lower their immersion in the virtual world.

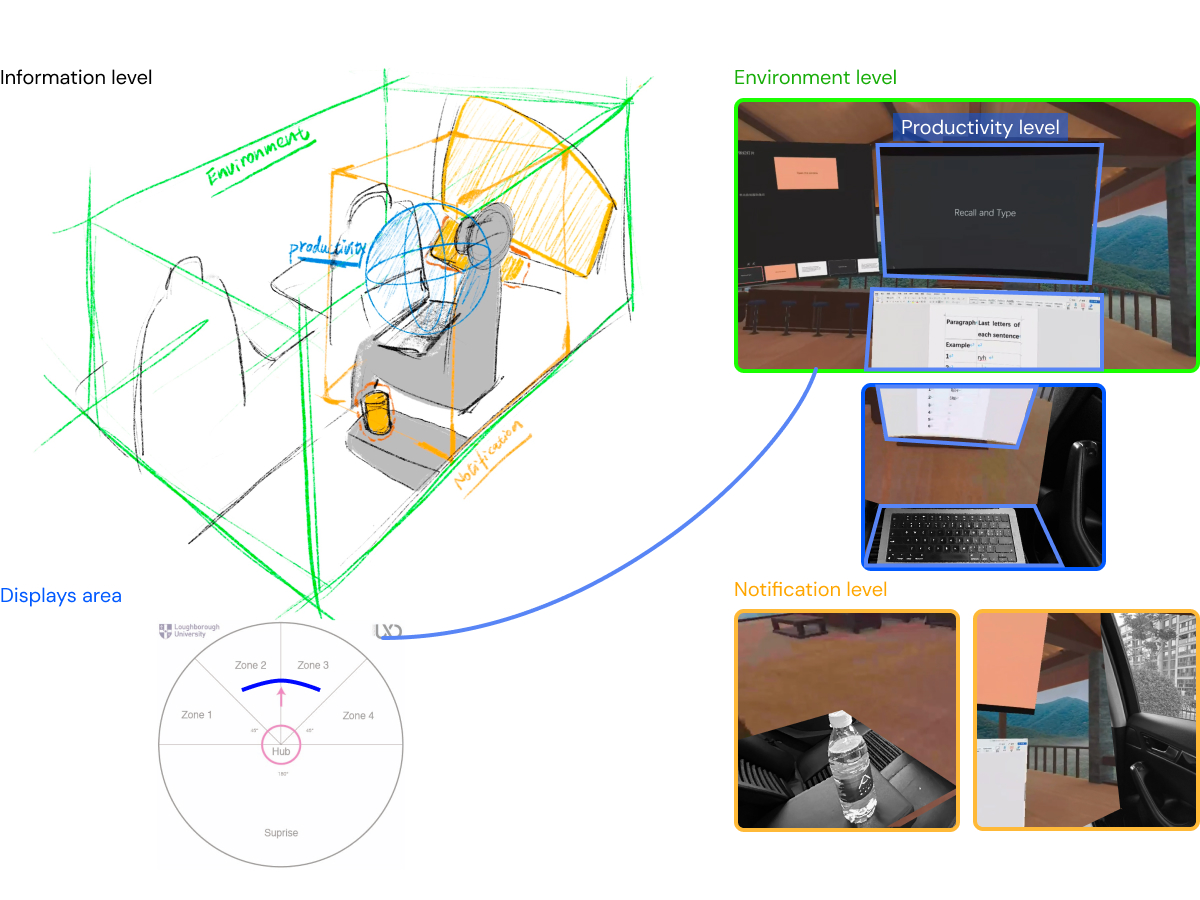

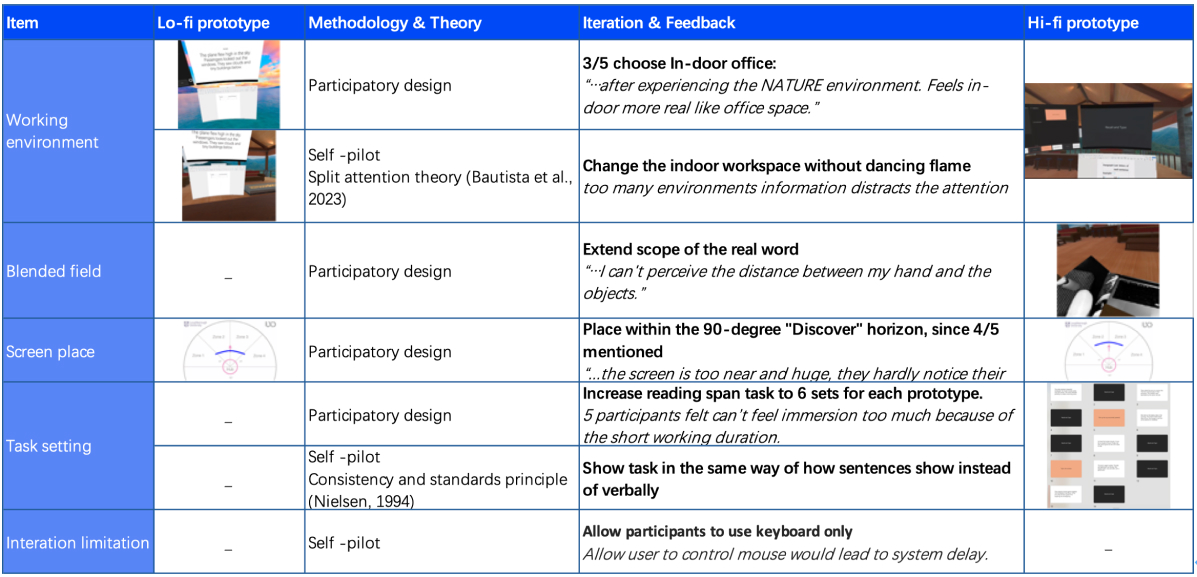

To verify the hypothesis, three prototypes were developed with different blending levels. Meta Quest 2 was combined with “Immersed” (Immersed, 2024) to create the prototypes. All the prototypes had two extended displays and calm ambient rain sounds.

No blending: An indoor office where participants can see the natural view through the surrounding windows without blending any car interior.

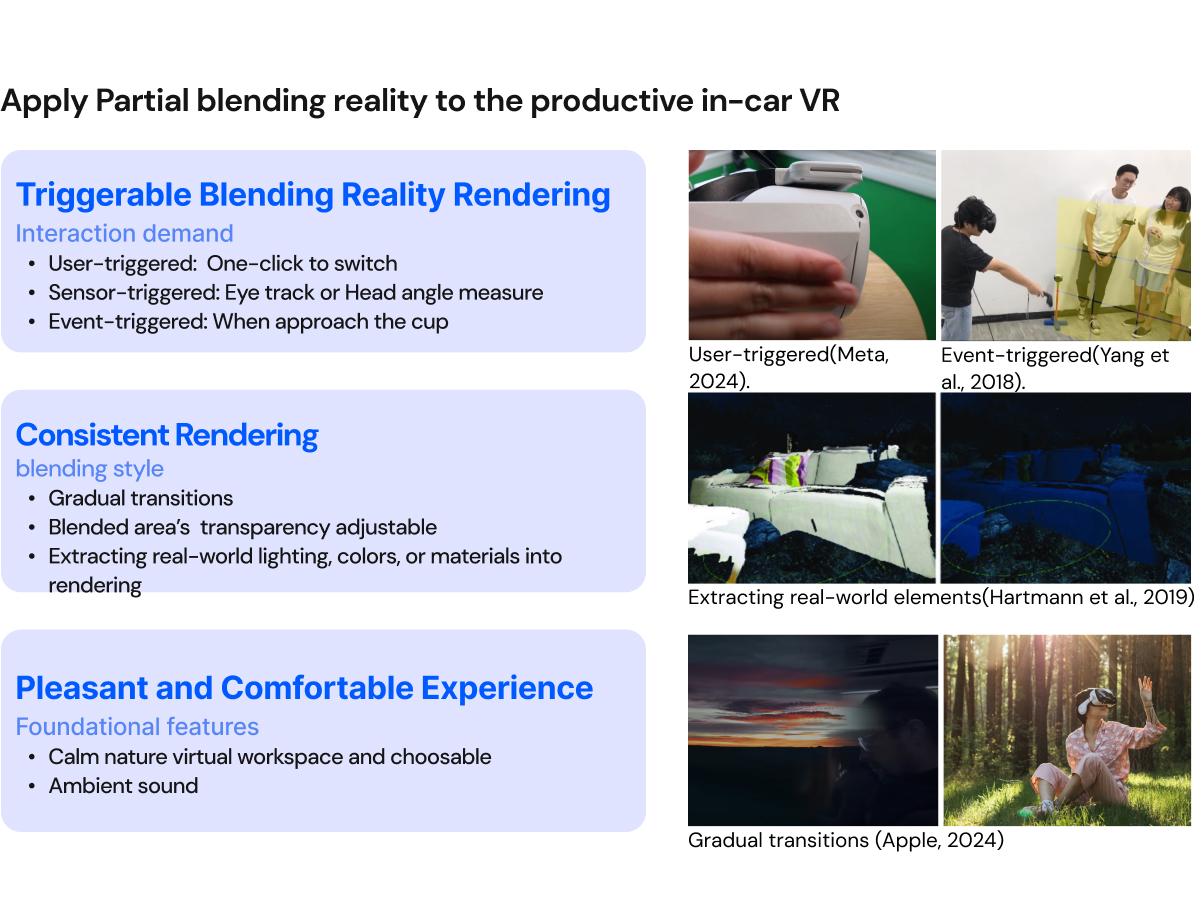

Partial blending: A virtual workspace mixing and mapping the essential car surroundings, such as the windows, armrests, and part of the rear seat.

Whole blending: Blending all the car interior into the virtual world.

Environment setup: Two extended displays cover a 90-degree range at the productivity level. The blended reality area renders critical elements like the armrest, cup, legs, keyboard, and right-side window. Users chose a virtual office with a scenic view, and ambient rain sounds are included to enhance comfort and focus, as suggested by Li et al.

Rendering style: The virtualized rendering style showed high degrees of presence and awareness (Hartmann et al., 2019). This style renders the car interior in “black and white” without much detail. It is the most feasible and lowest-cost way to maintain usability in VR under Meta Quest 2’s hardware constraints.

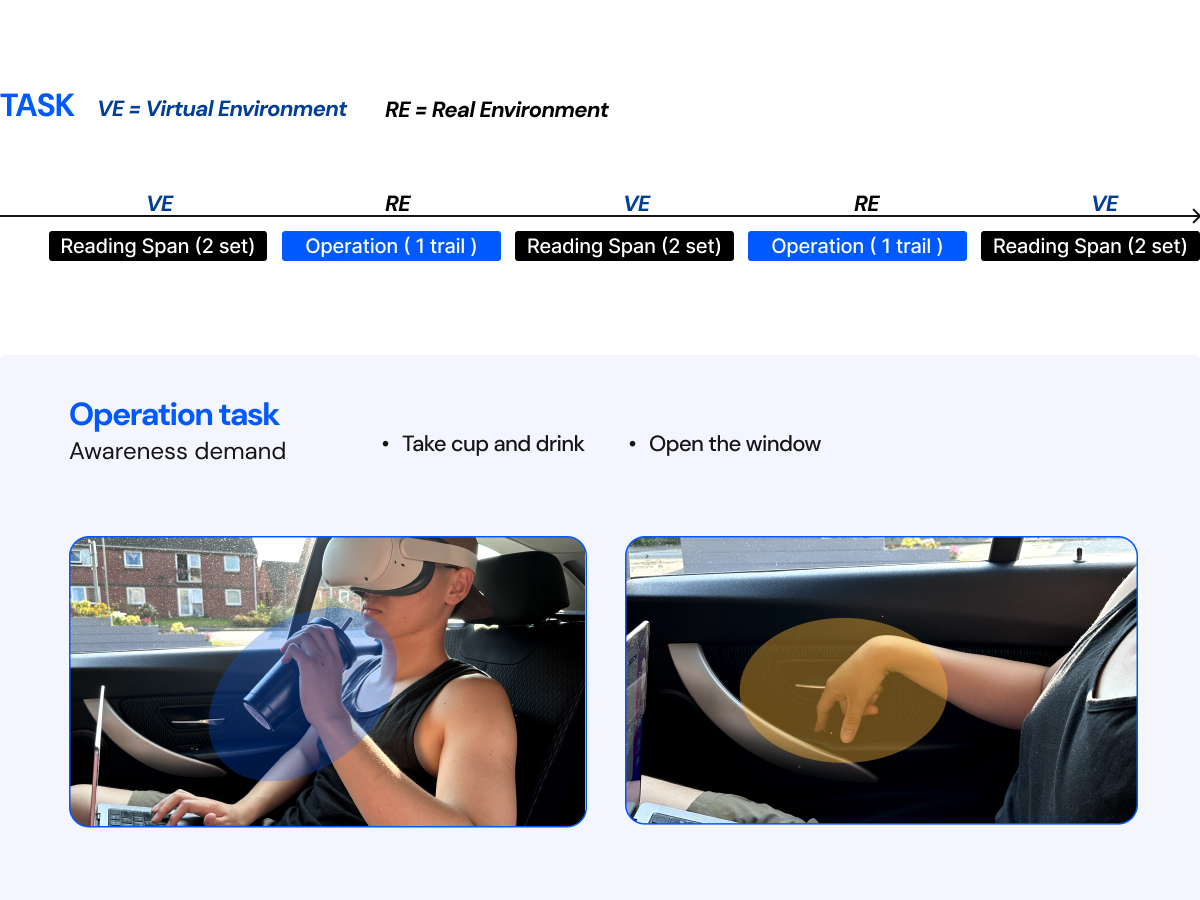

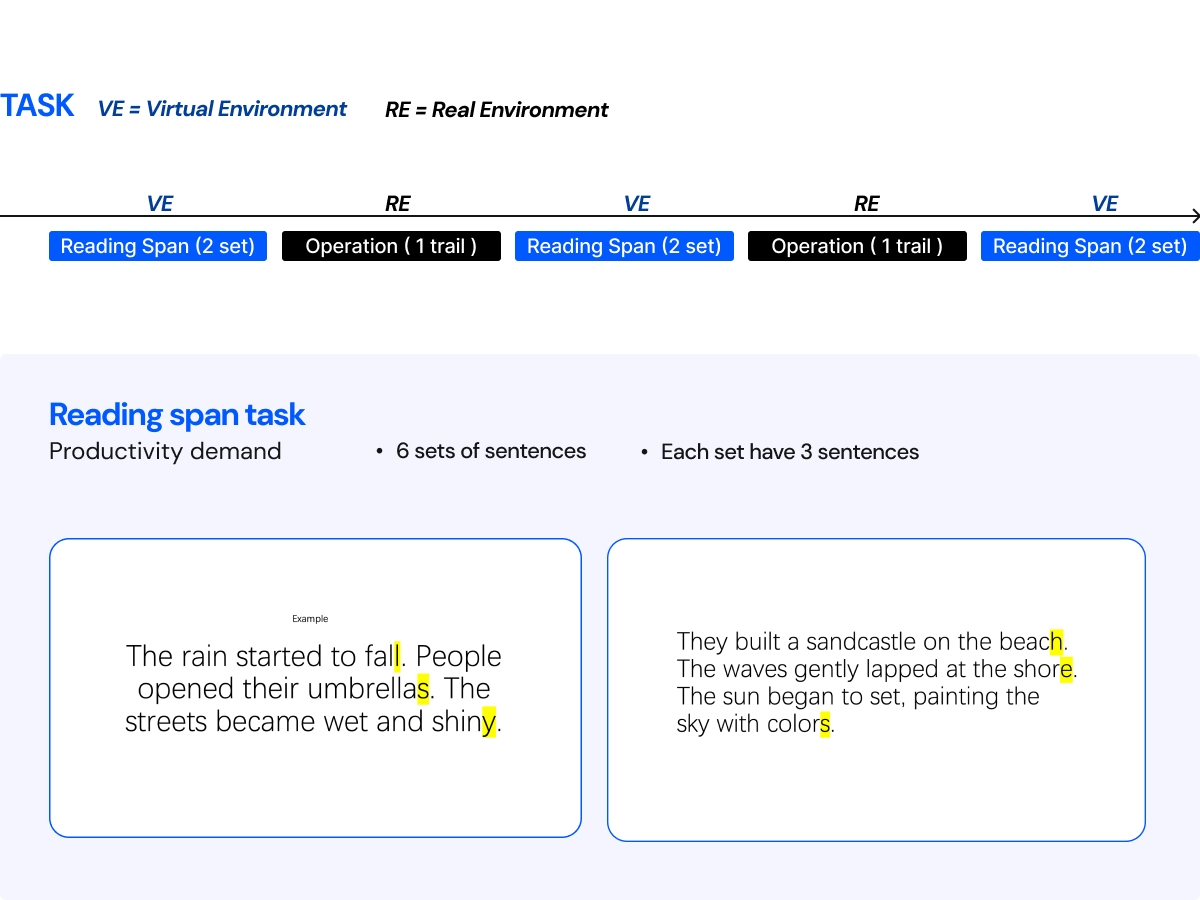

Operation task: According to “Information levels of rear-seat VR productivity” (Li et al., 2020), the operating tasks include opening windows and taking a cup to drink.

Working task: Applying Cognitive Load Theory to users’ common reading and typing activities shows that these activities use short-term (working) memory (John, 2009). Therefore, this experiment will include a reading span task to engage working memory, aligning with other productivity studies.

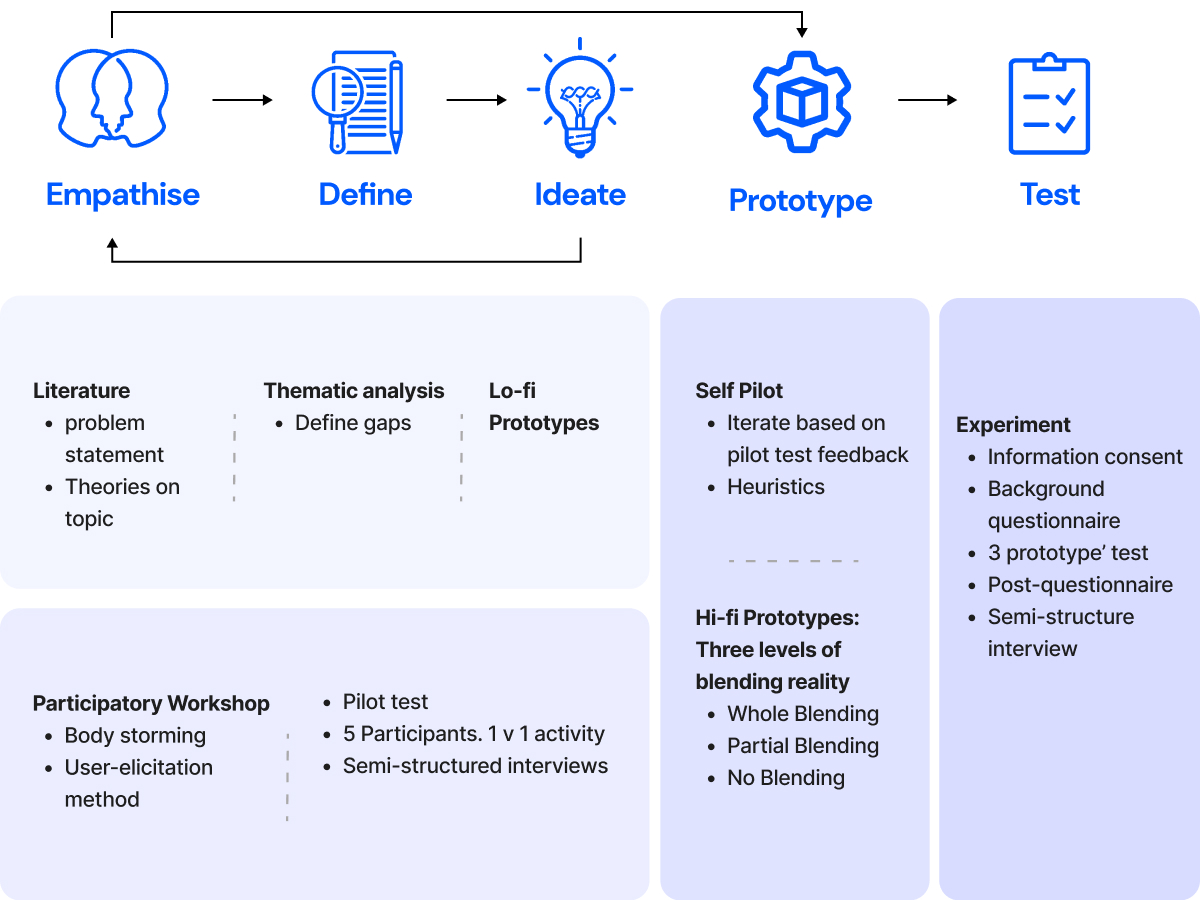

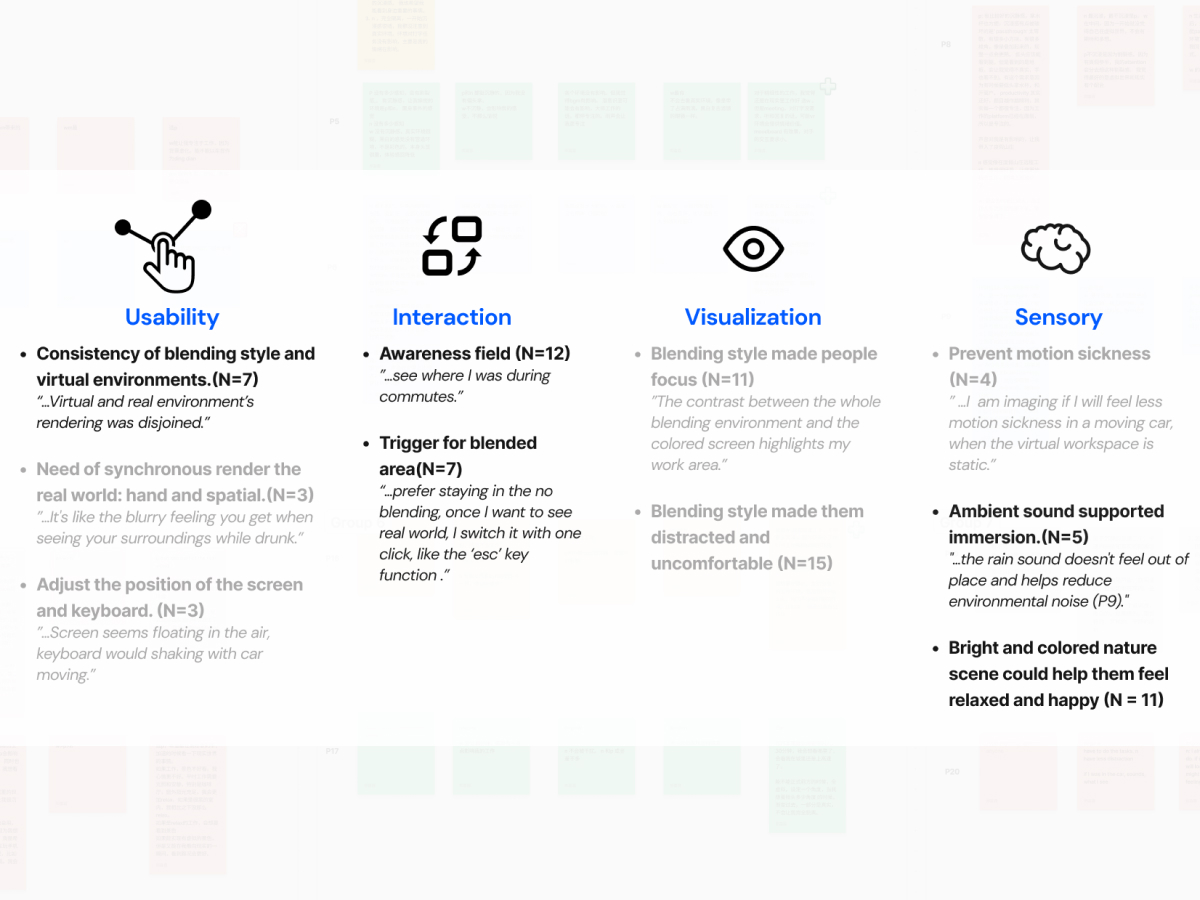

The participatory workshop, including bodystorming, helped foster participant empathy and validate user needs. Following the workshop, a pilot test was conducted to 1) validate test procedures, 2) refine tasks, and 3) estimate task duration. Finally, a self-pilot was performed, guided by heuristic principles, to assess usability and filter participants’ feedback.

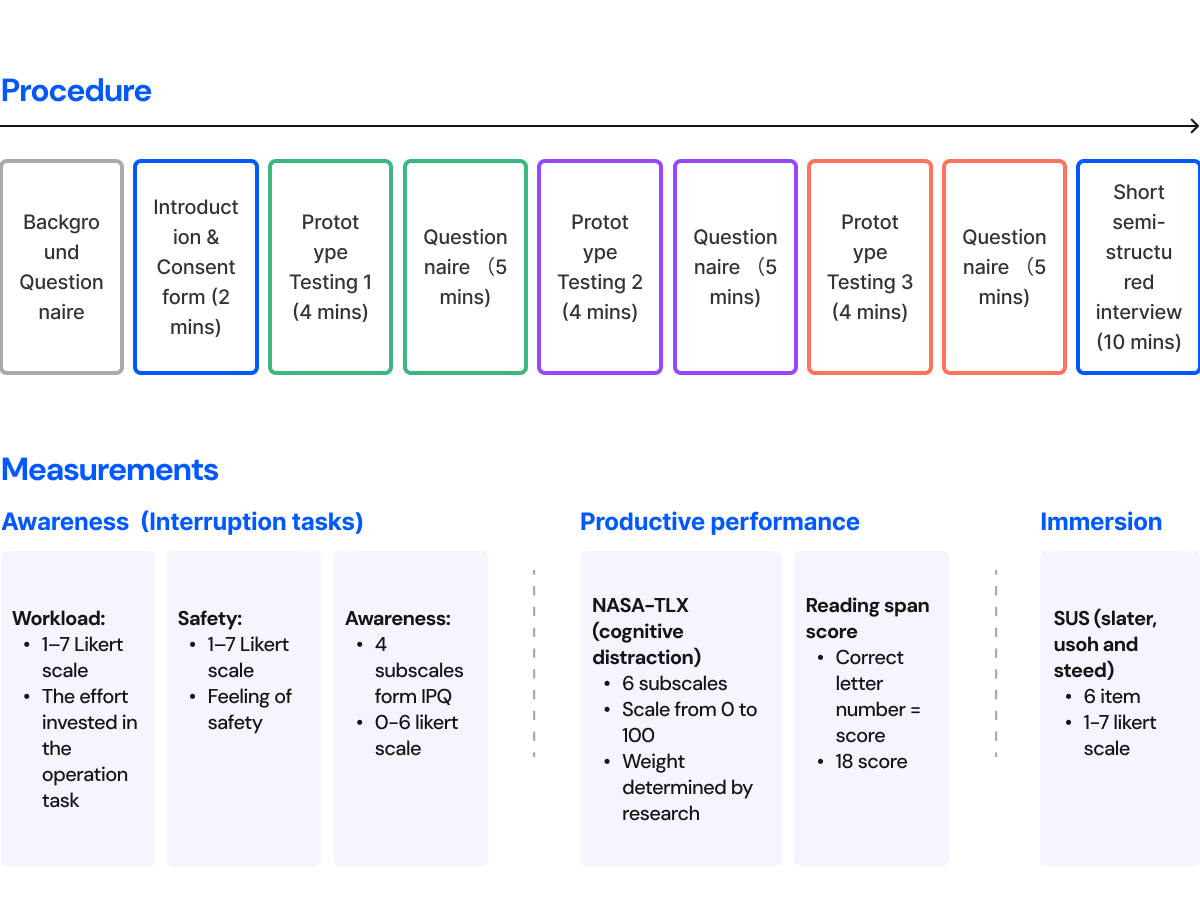

This study used a within-subjects experiment to investigate the effects of blending level on productive performance, immersion, and situational awareness. Blending level is the only independent variable, and the data collected cover preference, awareness, productive performance, and immersion. Please refer to the diagram below for details.

Twenty-one participants took part, all of whom had used VR before. Only 19% of them (4 of 21) had never worked while commuting.
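The write-up does not say how the order of the three blending conditions was assigned to participants. As a minimal sketch, assuming a cyclic Latin square was used (an assumption, not a detail from the study), the twenty-one participants could be spread evenly over three orders. The names `CONDITIONS`, `latin_square_orders`, and `assign_orders` are hypothetical.

```python
from collections import Counter

# The three blending levels described in the prototypes section.
CONDITIONS = ["no blending", "partial blending", "whole blending"]


def latin_square_orders(conditions):
    """Return the cyclic Latin-square orders: row i starts at condition i."""
    k = len(conditions)
    return [[conditions[(row + col) % k] for col in range(k)] for row in range(k)]


def assign_orders(n_participants, conditions):
    """Map participant IDs (P1..Pn) to an order by cycling through the square.

    Assumption: simple round-robin assignment; the study's real procedure
    is not documented in the source.
    """
    orders = latin_square_orders(conditions)
    return {f"P{i + 1}": orders[i % len(orders)] for i in range(n_participants)}


schedule = assign_orders(21, CONDITIONS)

# Count how often each condition is experienced first.
first_counts = Counter(order[0] for order in schedule.values())
print(schedule["P1"])
print(dict(first_counts))
```

With 21 participants and 3 orders, each condition appears first for exactly 7 participants. A cyclic square balances position only; it does not balance which condition directly precedes which.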

Most of the study took place in a confined environment using tables, chairs, and cardboard boxes to simulate a car’s rear seat. Five sessions were conducted in a stationary BMW 318d.

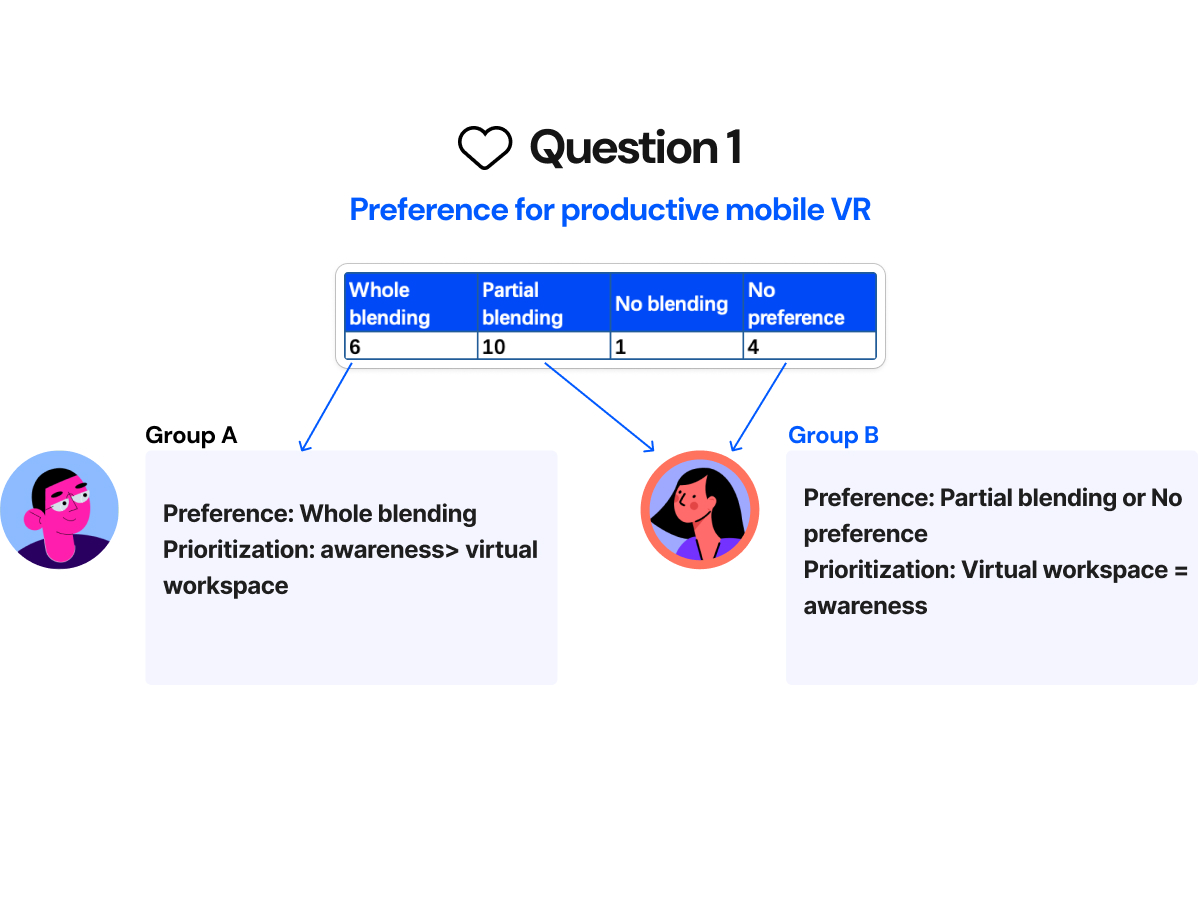

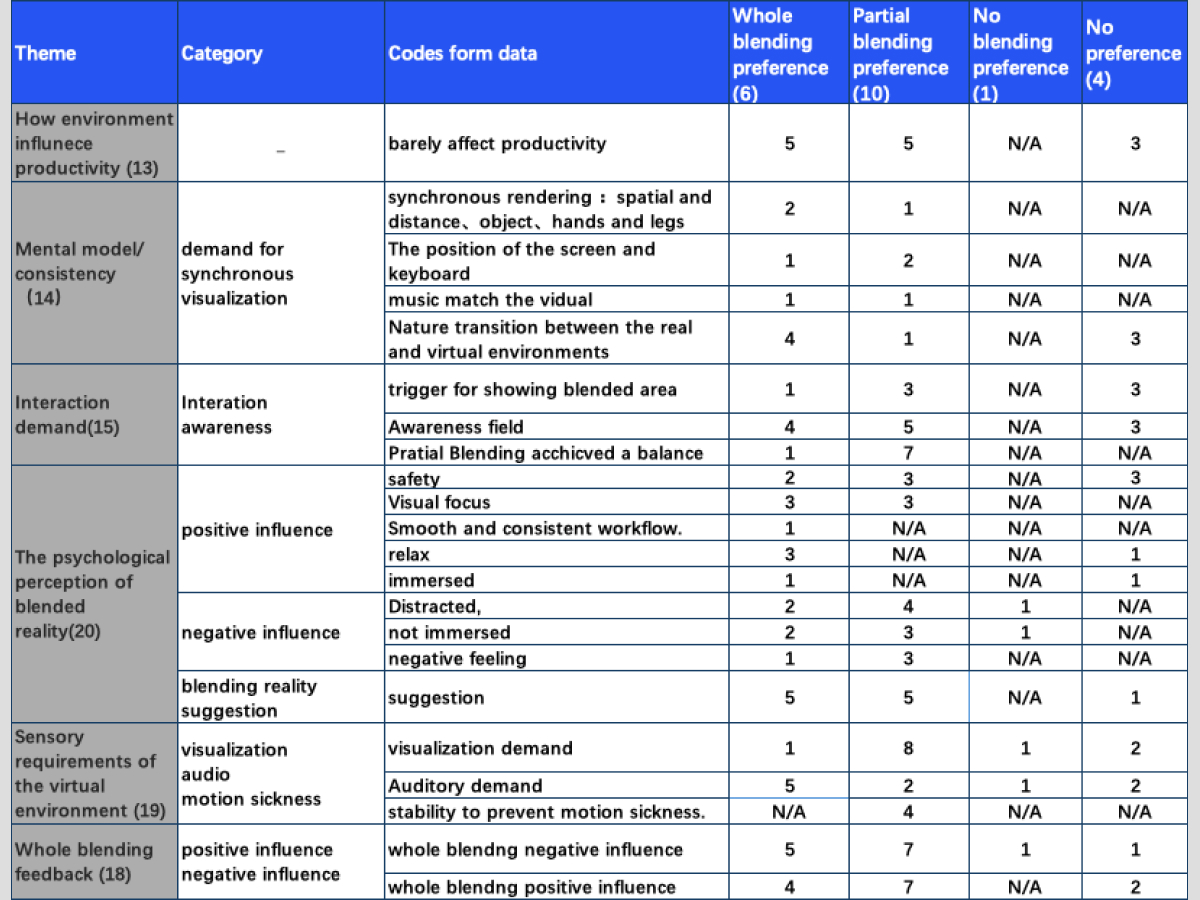

Two user groups were categorized based on their feedback. This study collected more data from Group B and further explored implications based on the data from Group B.

Group A: 1) feels unsafe; 2) prioritizes safety; 3) dissatisfied with the current transition between virtual and real.

“The black-and-white world is also relaxing. It has already helped me blur out the background (P10).”

Group B: 1) enjoys the virtual environment while maintaining some awareness of the real world; 2) feels secure in the car; 3) supported by passthrough.

“Partial blending is the best, it allows me to see the real world, reducing fear, while the virtual world makes me feel more relaxed emotionally. The combination of both makes me feel more comfortable (P7).”

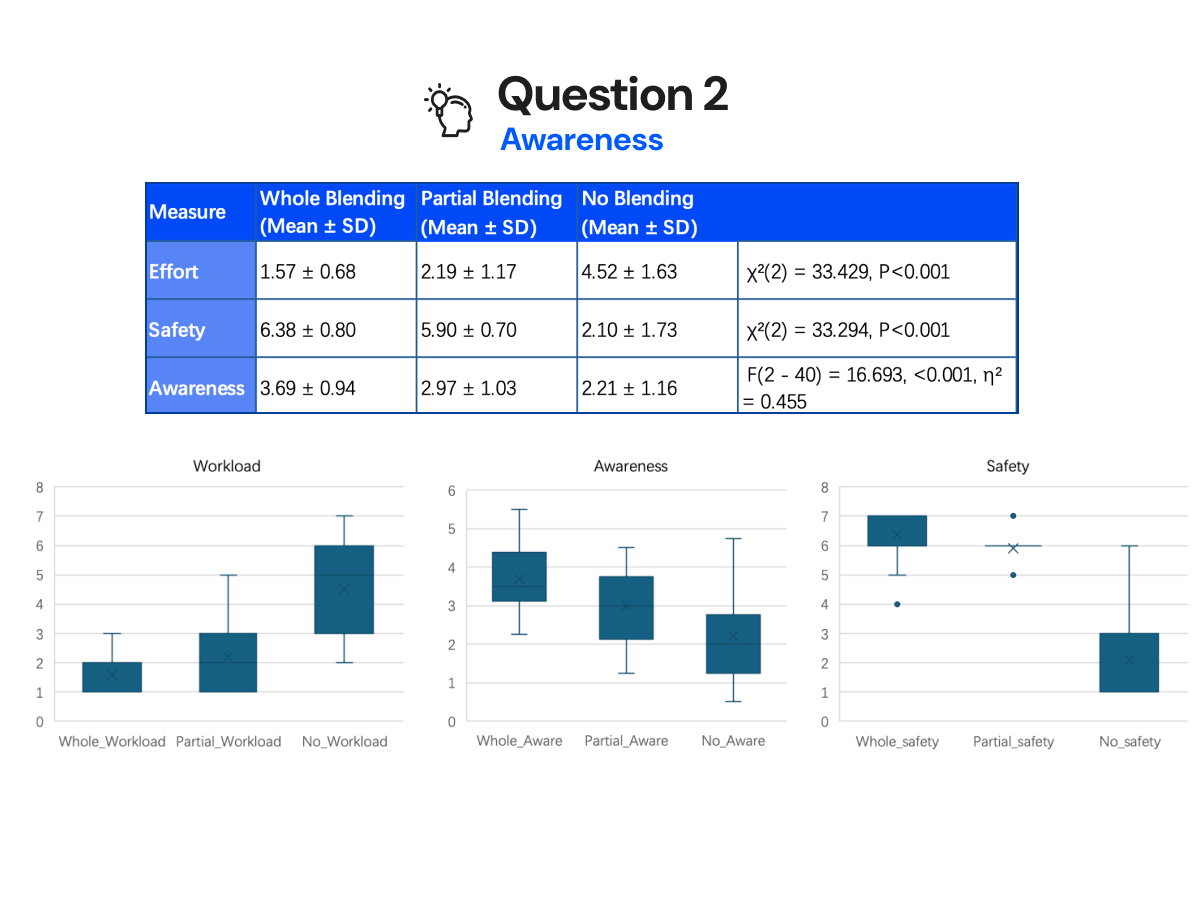

Significant differences were found between the blending-level conditions for three variables: (a) perceived workload: Chi-Square = 33.429, p < 0.001; (b) perceived safety: Chi-Square = 33.294, p < 0.001; (c) perceived awareness: F (2, 40) = 16.693, p < 0.001, Partial Eta Squared = 0.455.
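The reported effect size for awareness can be cross-checked from the F ratio alone. The sketch below assumes a one-way repeated-measures ANOVA with 2 and 40 degrees of freedom, which matches three conditions and twenty-one participants: (3 - 1) = 2 and (3 - 1) × (21 - 1) = 40. The function name `partial_eta_squared` is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio: (F * df1) / (F * df1 + df2)."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Perceived awareness, using the F value reported above.
awareness_effect = partial_eta_squared(16.693, df_effect=2, df_error=40)
print(round(awareness_effect, 3))  # 0.455
```

The result agrees with the reported Partial Eta Squared of 0.455, so the F value, degrees of freedom, and effect size are mutually consistent.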

There are no significant differences between the whole blending and partial blending conditions for the safety variable.

Safety: 1) Similar to the conclusions of RealityCheck; 2) Blending reality helped enhance passengers’ safety (Hartmann et al., 2019). However, the feeling of safety in whole and partial blending is similar.

Awareness and workload: 1) Similar to the findings of RealityLens (Wang et al., 2022); 2) a higher level of blending better helped participants perceive their surroundings and interact with the car’s interior.

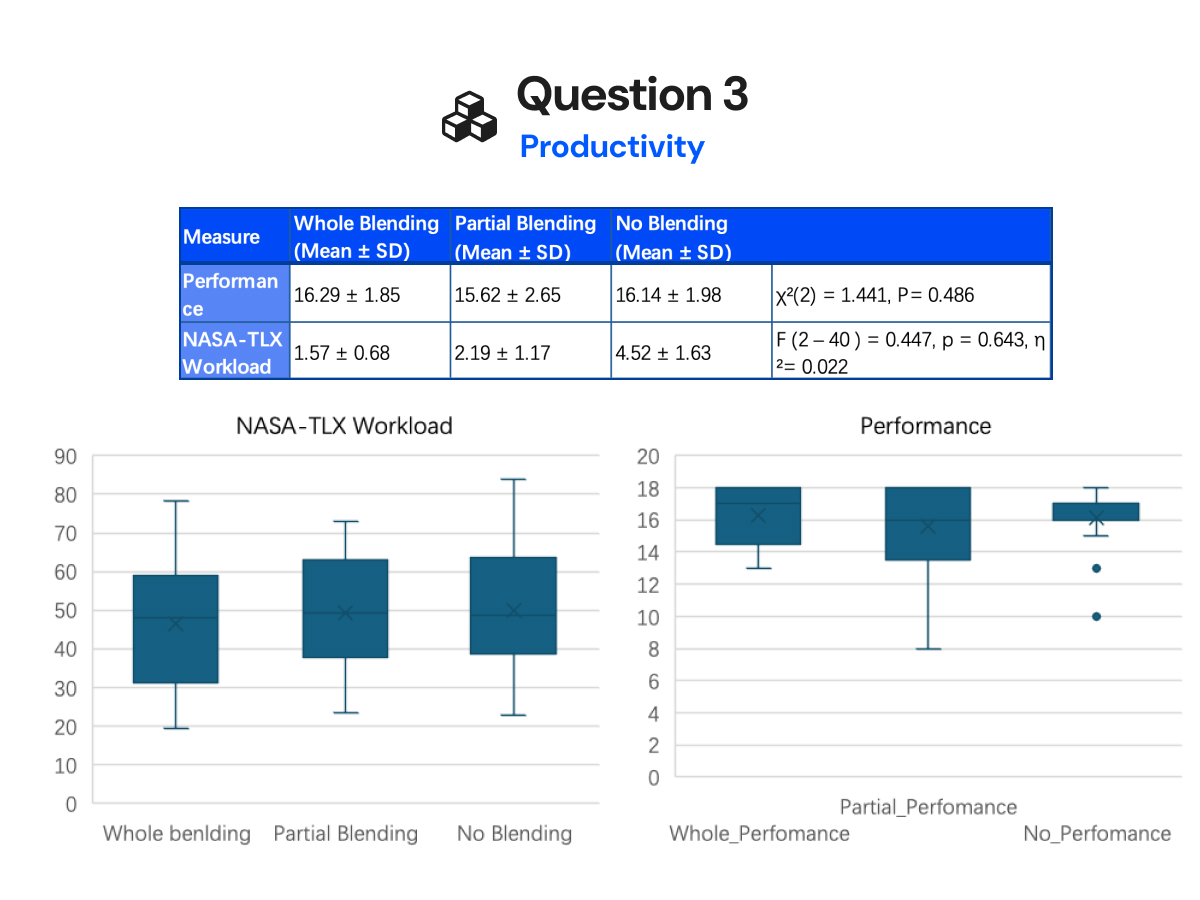

No significant difference between the conditions at the 0.05 level: (a) NASA-TLX Workload [F (2, 40) = 0.447, p = 0.643, Partial Eta Squared = 0.022]; (b) Reading span score (Chi-square = 1.441, p = 0.486)
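The source does not name the test behind the chi-square value. A minimal sketch, assuming it is a Friedman test across the three blending conditions (so 2 degrees of freedom), shows how such a statistic is computed and how the reported p-value follows from it. The scores in `hypothetical_scores` are invented for illustration and are not study data; all function names are hypothetical, and the tie correction is omitted for brevity.

```python
import math


def average_ranks(values):
    """Rank values ascending (1 = smallest); tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2.0 + 1.0
        for position in range(start, end + 1):
            ranks[order[position]] = mean_rank
        start = end + 1
    return ranks


def friedman_statistic(rows):
    """Friedman chi-square (no tie correction); one row of scores per participant."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
    return 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)


def chi_square_p_value_df2(statistic):
    """Upper-tail p-value for a chi-square statistic with exactly 2 degrees of freedom.

    For df = 2 the survival function has the closed form exp(-x / 2).
    """
    return math.exp(-statistic / 2.0)


# Hypothetical reading span scores (columns: no, partial, whole blending).
hypothetical_scores = [[16, 15, 17], [14, 14, 18], [17, 16, 15], [15, 13, 16]]
demo_statistic = friedman_statistic(hypothetical_scores)
print(demo_statistic, chi_square_p_value_df2(demo_statistic))

# Cross-check the reported reading span result (Chi-square = 1.441).
reading_span_p = chi_square_p_value_df2(1.441)
print(f"{reading_span_p:.4f}")
```

Under the 2-degrees-of-freedom assumption, a statistic of 1.441 gives a p-value that matches the reported 0.486 to within rounding, which supports reading it as a three-condition rank test.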

All three blending levels showed fairly high performance [ M (Whole) = 16.29, SD (Whole) = 1.85; M (Partial) = 15.62, SD (Partial) = 2.65; M (No) = 16.14, SD (No) = 1.98].

Lack of further research: The result aligns with Li et al.’s finding, but the independent variable differed from Li et al.’s.

Interview results: Participants believed reading and typing tasks naturally demand concentration (N=13).

Limitation of the intense experimental environment: Participants might have been more focused than they would be in everyday work in a car.

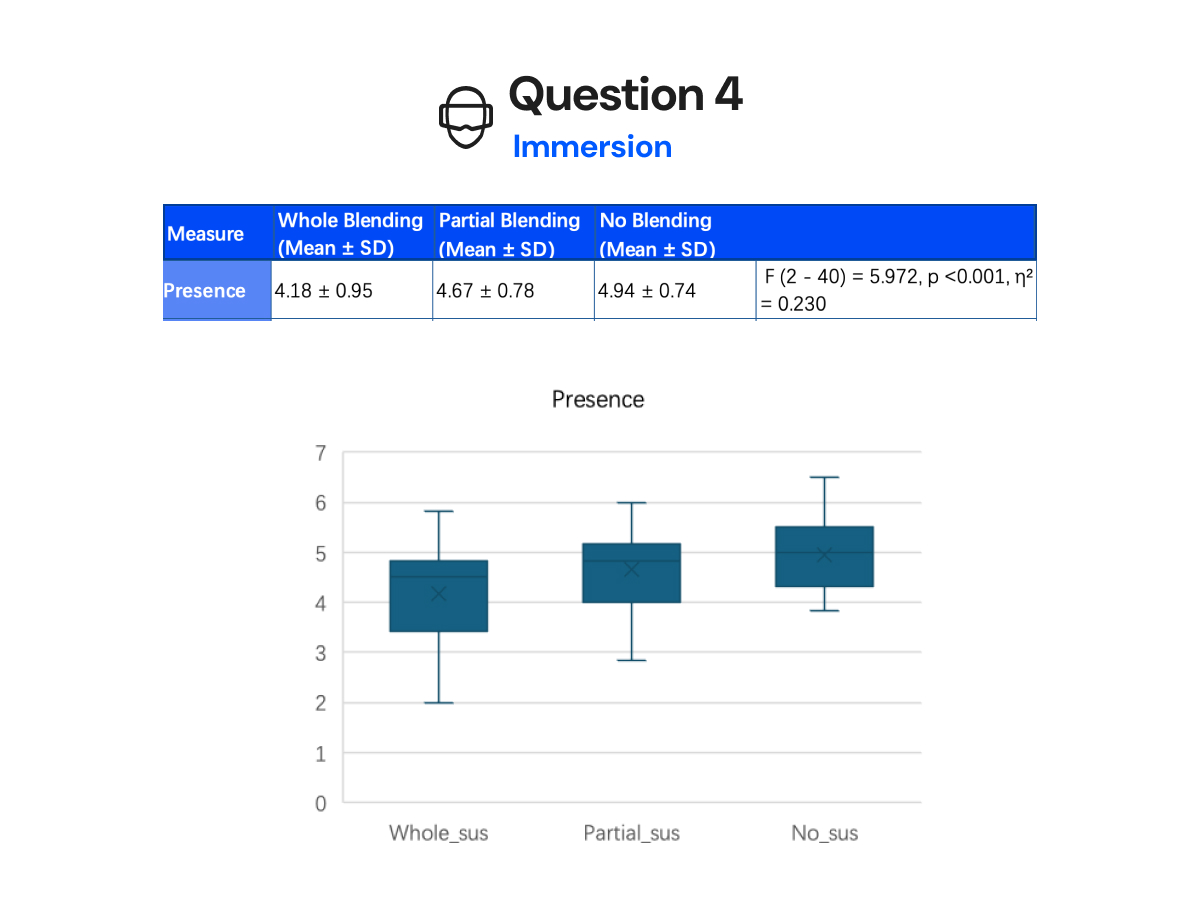

Presence differed significantly across conditions [ F (2, 40) = 5.972, p < 0.001, Partial Eta Squared = 0.230], showing a small effect size for varying blending levels on presence.

No blending had the highest mean SUS score (M = 4.94, SD = 0.74), followed by partial blending condition (M = 4.67, SD = 0.78) and whole blending condition (M = 4.18, SD = 0.95).

Pairwise comparisons: Only whole blending differed significantly from no blending (p = 0.015).

This is consistent with Grubert et al.’s findings regarding the blending of video of the keyboard. A more specific result is that both whole and partial blending offered high immersion and presence.

konstanz.de%2Fentities%2Fpublication%2F5a678d5e-9405-4343-8bce-0f304bbe0fbb [Accessed 23 Aug. 2024].

Hartmann, J., Holz, C., Ofek, E. and Wilson, A. (2019). RealityCheck. Human Factors in Computing Systems. doi:https://doi.org/10.1145/3290605.3300577.

Hock, P., Benedikter, S., Gugenheimer, J. and Rukzio, E. (2017). CarVR. Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. doi:https://doi.org/10.1145/3025453.3025665.

McGill, M., Boland, D., Murray-Smith, R. and Brewster, S. (2015). A Dose of Reality. Human Factors in Computing Systems. doi:https://doi.org/10.1145/2702123.2702382.

McGill, M., Kehoe, A., Freeman, E. and Brewster, S. (2020). Expanding the Bounds of Seated Virtual Workspaces. ACM Transactions on Computer-Human Interaction, 27(3), pp.1–40. doi:https://doi.org/10.1145/3380959.

Meta (2023). Meta Quest 2: Immersive All-In-One VR Headset | Meta Store. [online] www.meta.com. Available at: https://www.meta.com/gb/quest/products/quest-2/.

Apple (United Kingdom). (n.d.). Apple Vision Pro. [online] Available at: https://www.apple.com/uk/apple-vision-pro/.

Wang, C., Chen, B. and Chan, L. (2022). RealityLens: A User Interface for Blending Customized Physical World View into Virtual Reality. doi:https://doi.org/10.1145/3526113.3545686.

Yang, K.-T., Wang, C.-H. and Chan, L. (2018). ShareSpace. doi:https://doi.org/10.1145/3242587.3242630.